Countries around the world are investing vast sums of money to build ever-larger telescopes on the ground and in space, such as the Euclid Space Telescope recently launched by the European Space Agency (ESA). These and other facilities collect impressive amounts of data on galaxies, quasars, and stars. Simulations such as FLAMINGO play a key role in the scientific interpretation of the data by connecting predictions from theories of our universe to the observed data.

According to the standard model of cosmology, the properties of our entire universe are set by a few numbers called 'cosmological parameters' (six of them in the simplest version of the theory). The values of these parameters can be measured very precisely in various ways. One of these methods relies on the properties of the cosmic microwave background radiation, the thermal radiation left over from the Big Bang. However, the values obtained in this way do not match those measured by other techniques that rely on the way in which the gravitational force of galaxies bends light. These 'tensions' could signal the demise of the standard model of cosmology, the cold dark matter model.

The computer simulations may be able to reveal the cause of these tensions because they can inform scientists about possible biases (systematic errors) in the measurements. If none of these prove sufficient to explain away the tensions, the theory will be in real trouble.

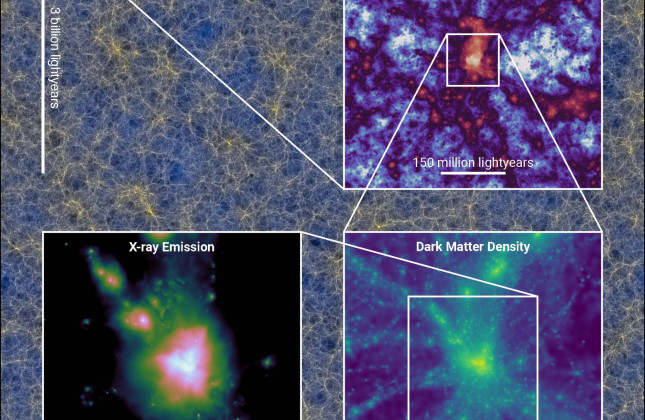

Until now, the computer simulations used for comparison with the observations have tracked only cold dark matter. 'Although the dark matter dominates gravity, the contribution of ordinary matter can no longer be neglected,' says research leader Joop Schaye (Leiden University), 'since it could be similar to the deviations between the models and the observations.' The first FLAMINGO results show that both neutrinos and ordinary matter are essential for making accurate predictions, but that including them does not eliminate the tensions between the different cosmological observations.

Ordinary matter and neutrinos

Simulations that also track ordinary, baryonic matter are much more challenging and require much more computing power. This is because ordinary matter, which makes up only sixteen per cent of all matter in the universe, feels not only gravity but also gas pressure, which can cause matter to be blown out of galaxies by active black holes and supernovae far into intergalactic space. The strength of these intergalactic winds depends on explosions in the interstellar medium and is very difficult to predict. On top of this, the contribution of neutrinos, subatomic particles of very small but not precisely known mass, is also important, yet the motion of neutrinos had not been simulated until now either.

Method

The astronomers have completed a series of computer simulations tracking structure formation in dark matter, ordinary matter, and neutrinos. PhD student Roi Kugel (Leiden University) explains: 'The effect of galactic winds was calibrated using machine learning, by comparing the predictions of lots of different simulations of relatively small volumes with the observed masses of galaxies and the distribution of gas in clusters of galaxies.'

The researchers used a supercomputer to simulate the model that best describes the calibration observations, in different cosmic volumes and at different resolutions. In addition, they varied the parameters of the model, including the strength of galactic winds, the mass of neutrinos, and the cosmological parameters, in simulations of slightly smaller but still large volumes.

The largest simulation uses 300 billion resolution elements (particles with the mass of a small galaxy) in a cubic volume with edges of ten billion light years. This is the largest cosmological computer simulation with ordinary matter ever completed. Matthieu Schaller (Leiden University): 'To make this simulation possible, we developed a new code, SWIFT, which efficiently distributes the computational work over 30 thousand CPUs.'
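To give a feel for the scale of these numbers, the short Python sketch below works out two rough quantities from the figures quoted above: the average distance between resolution elements and the average number of elements each CPU would handle. It is only a back-of-the-envelope illustration. It assumes the elements are spread uniformly through the volume and that the work is split perfectly evenly over the CPUs, neither of which is claimed for the actual simulation, and the function names are made up for this example.

```python
# Figures quoted in the article.
N_ELEMENTS = 300e9    # resolution elements in the largest simulation
BOX_EDGE_LY = 10e9    # edge length of the cubic volume, in light years
N_CPUS = 30_000       # CPUs the computational work is distributed over


def mean_spacing_ly(n_elements: float, box_edge_ly: float) -> float:
    """Mean separation between elements, ASSUMING a uniform distribution."""
    # A cube holding n elements has n**(1/3) elements along each edge.
    return box_edge_ly / n_elements ** (1.0 / 3.0)


def elements_per_cpu(n_elements: float, n_cpus: int) -> float:
    """Average load per CPU, ASSUMING a perfectly even split."""
    return n_elements / n_cpus


spacing = mean_spacing_ly(N_ELEMENTS, BOX_EDGE_LY)
load = elements_per_cpu(N_ELEMENTS, N_CPUS)

print(f"mean spacing: about {spacing:.2e} light years")
print(f"average load: about {load:.1e} elements per CPU")
```

Under these idealised assumptions the elements sit on average roughly one and a half million light years apart, and each CPU is responsible for about ten million of them.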

Follow-up research

The FLAMINGO simulations open a new virtual window on the universe that will help make the most of cosmological observations. In addition, the large amount of (virtual) data creates opportunities to make new theoretical discoveries and to test new data analysis techniques, including machine learning. Using machine learning, astronomers can then make predictions for random virtual universes. By comparing these with large-scale structure observations, they can measure the values of cosmological parameters. Moreover, they can measure the corresponding uncertainties by comparing with observations that constrain the effect of galactic winds.

Scientific papers

The FLAMINGO project: cosmological hydrodynamical simulations for large-scale structure and galaxy cluster surveys, Joop Schaye et al.

FLAMINGO: Calibrating large cosmological hydrodynamical simulations with machine learning, Roi Kugel et al.

The FLAMINGO project: revisiting the S8 tension and the role of baryonic physics, Ian McCarthy et al.

Website

FLAMINGO project website with images, videos, and interactive visualisations.